Image Credits: Google DeepMind

AI models that play games go back decades, but they are generally specialized in one game and always play to win. Google DeepMind researchers have a different goal with their latest work: a model that has learned to play multiple 3D games much as a human does, and that also does its best to understand and act on verbal instructions.

Of course, there are “AI” or computer characters that can do this sort of thing, but they are more like NPCs that you control indirectly through game features, that is, formal in-game commands.

DeepMind's SIMA (Scalable Instructable Multiworld Agent) has no access to the game's internal code or rules. Instead, it was trained on many hours of video showing humans playing. From this data, and from the annotations provided by data labelers, the model learns to associate specific visual representations with actions, objects, and interactions. The researchers also recorded videos of players instructing one another on what to do in-game.

For example, from the way pixels move in a certain pattern on the screen, it might learn that this is an action called “moving forward,” or, when the character approaches a door-like object and uses the doorknob-like object on it, that this is “opening” a “door.” These are simple things, tasks or events that take a few seconds, but they are more than just pressing a key or identifying something.

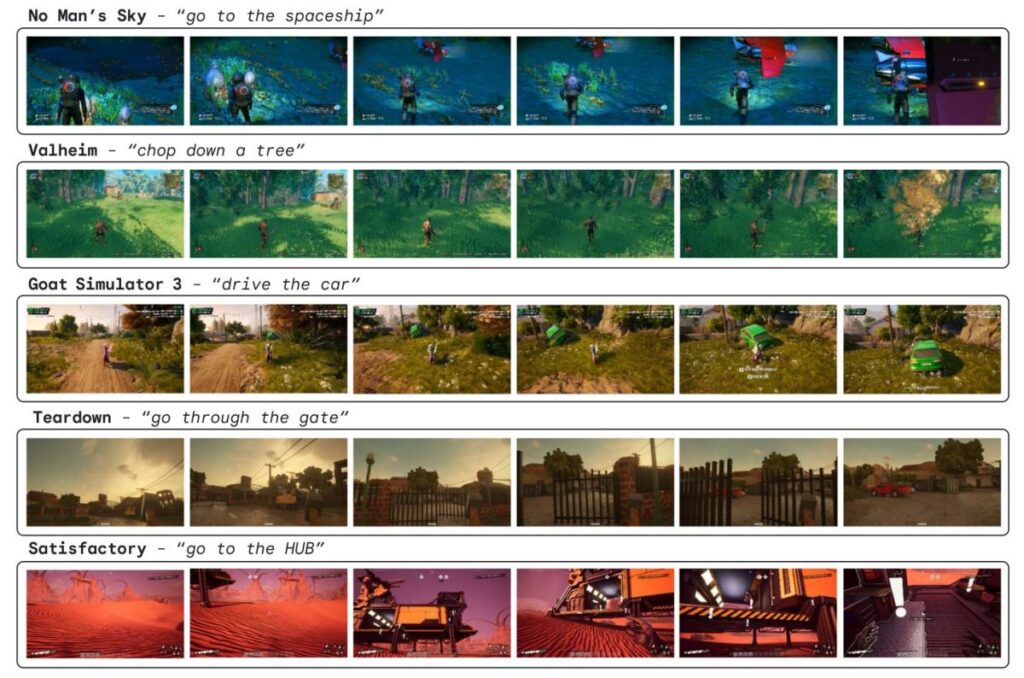

The training videos were recorded in multiple games, from Valheim to Goat Simulator 3, whose developers were involved with and consented to this use of their software. In a call with the press, the researchers said one of their main goals was to see whether training an AI to play one set of games makes it capable of playing others it has not seen, a process called generalization.

The answer is yes, but there are caveats. AI agents trained on multiple games performed better on games with which they had no prior experience. But of course, many games have specific and unique mechanics and terminology that can thwart the best-prepared AI. However, there is nothing preventing the model from learning these things other than the lack of training data.

One reason is that, although there is a lot of in-game jargon, there are really only so many “verbs” a player can use to actually affect the game world. Whether you are setting up a lean-to, pitching a tent, or summoning a magical shelter, you're really just “building a house,” right? So this map of several dozen primitives that the agent currently recognizes is very interesting to peruse.

A map of several dozen actions that SIMA recognizes and can perform or combine. Image Credits: Google DeepMind
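The idea that many game-specific phrases collapse onto a small set of shared "verbs" can be illustrated with a toy lookup. This is only a sketch: the phrases, the primitive names, and the `to_primitive` helper are all invented for illustration and are not SIMA's actual action vocabulary or method (the real model learns these associations from video and language, not from a hand-written table).

```python
from typing import Optional

# Hypothetical table: each shared primitive lists game-specific phrasings
# that amount to the same underlying action. Invented for this sketch.
PRIMITIVES = {
    "build a house": [
        "set up a lean-to",
        "pitch a tent",
        "summon a magical shelter",
    ],
    "open": [
        "open the door",
        "unlatch the gate",
    ],
}


def to_primitive(instruction: str) -> Optional[str]:
    """Return the shared primitive an instruction maps to, or None if unknown."""
    text = instruction.lower().strip()
    for primitive, phrases in PRIMITIVES.items():
        # A phrase matches if it appears anywhere in the instruction.
        if any(phrase in text for phrase in phrases):
            return primitive
    return None


if __name__ == "__main__":
    print(to_primitive("Pitch a tent by the river"))
    print(to_primitive("Summon a magical shelter"))
    print(to_primitive("Do a backflip"))
```

The point of the toy is only that different surface terminology can share one underlying action, which may be part of why training on several games could transfer to an unseen one.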

In addition to fundamentally advancing agent-based AI, the researchers' ambition is to create more natural gameplay companions than today's rigid, hard-coded ones.

“Rather than having a superhuman agent you play against, you can have SIMA players beside you that are cooperative, that you can give instructions to,” said Tim Harley, one of the project's leads.

When they play, all they see are pixels on the game screen, so they have to learn how to do things much the same way we do. But it also means they can adapt and create new behaviors.

You might be curious how this stacks up against the simulator approach, a common way of creating agent-type AI. There, a mostly unsupervised model experiments intensively in a 3D simulated world that runs far faster than real time, which lets it learn the rules intuitively and design behaviors around them without nearly as much annotation work.

“Traditional simulator-based agent training uses reinforcement learning, which requires the game or environment to provide a ‘reward’ signal for the agent to learn from (for example, a win or loss in the case of Go or StarCraft, or a score),” Harley told TechCrunch, noting that this approach was used for those games and produced amazing results.

“In the games we use, such as the commercial games from our partners, we do not have access to such a reward signal. Moreover, we are interested in agents that can perform a wide variety of tasks described in free-form text. It is not practical for each game to evaluate a ‘reward’ signal for each possible goal. Instead, we train agents by imitation learning from human behavior, given goals in text.”

In other words, a rigid reward structure can limit what an agent pursues: if it is guided by a score, it will never attempt anything that does not maximize that score. But if it values something more abstract, like how close its behavior is to behavior it has observed working before, it can be trained to “want” to do just about anything, as long as the training data represents it somehow.
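To make the contrast concrete, here is a minimal sketch of goal-conditioned imitation, assuming a drastically simplified setting: observations are short text labels instead of pixels, and the "policy" is a frequency table instead of a neural network. The demonstrations and the `fit_imitation_policy` and `act` names are hypothetical and are not DeepMind's implementation. Note that no reward signal appears anywhere; the agent simply copies what humans most often did for a given goal and situation.

```python
from collections import Counter, defaultdict

# Hypothetical human demonstrations:
# (text goal, simplified observation, action the human took).
DEMOS = [
    ("open the door", "door ahead", "move forward"),
    ("open the door", "door ahead", "move forward"),
    ("open the door", "door ahead", "turn left"),
    ("open the door", "at door", "use doorknob"),
    ("chop a tree", "tree ahead", "move forward"),
    ("chop a tree", "at tree", "swing axe"),
]


def fit_imitation_policy(demos):
    """Count human actions per (goal, observation) and keep the most common one."""
    counts = defaultdict(Counter)
    for goal, observation, action in demos:
        counts[(goal, observation)][action] += 1
    return {key: c.most_common(1)[0][0] for key, c in counts.items()}


POLICY = fit_imitation_policy(DEMOS)


def act(goal, observation):
    """Imitate the demonstrators; None if this situation was never demonstrated."""
    return POLICY.get((goal, observation))


if __name__ == "__main__":
    print(act("open the door", "door ahead"))
    print(act("chop a tree", "at tree"))
    print(act("bake a cake", "kitchen"))
```

The last call returns `None`, which mirrors the caveat in the article: nothing stops the agent from learning a new behavior except the absence of training data that represents it.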

Other companies are exploring this kind of open-ended collaboration and creation as well. Conversations with NPCs, for example, are being looked at closely as an opportunity to put LLM-type chatbots to work. And in some very interesting research into agents, simple improvised actions and interactions are also being simulated and tracked by AI.

Of course, there are also experiments with infinite games like MarioGPT, but that's a completely different matter.