RSA Conference 2024 – San Francisco – Everyone's talking about deepfakes, but most of the AI-generated synthetic media circulating today will look archaic compared to the sophistication and volume of what's coming.

Kevin Mandia, CEO of Google Cloud's Mandiant, says it is likely a matter of months before the next generation of more realistic and convincing deepfake audio and video is mass-produced with AI technology. In an interview with Dark Reading, Mandia said he does not think deepfake content has been good enough so far. “We're on the cusp of a synthetic media storm that's actually going to manipulate people's hearts and minds in large quantities,” he said.

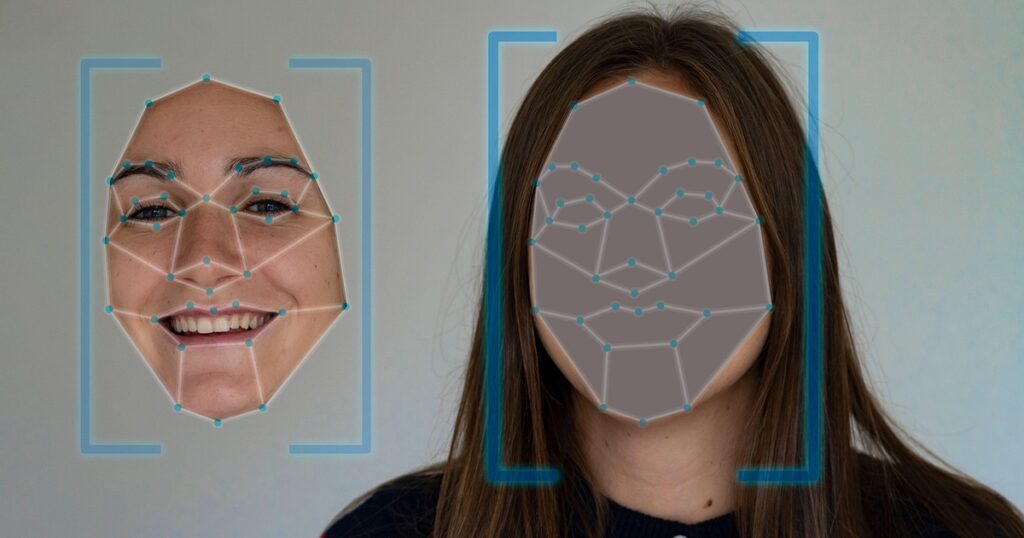

The election year is, of course, also a factor in the expected boom in deepfakes. The relatively good news is that, so far, most audio and video deepfakes are fairly easy to spot, either with existing detection tools or by knowledgeable humans. Voice ID security vendor Pindrop says it can identify and stop most fake audio clips, and many AI image creation tools notoriously fail to render human hands realistically. (Some generate a hand with nine fingers, for example, a telltale sign of a fake image.)

Security tools that detect synthetic media are beginning to emerge in the industry. They include a tool from Reality Defender, a startup that detects AI-generated media and was named Most Innovative Startup of 2024 in the RSA Conference Innovation Sandbox competition this week.

Source: Mandiant/Google Cloud

Mandia, an investor in Real Factors, a startup focused on AI-powered content fraud detection, said the main way to stop deepfakes from deceiving users and overshadowing authentic content is for content creators to embed watermarks. For example, Microsoft Teams and Google Meet clients would be watermarked with immutable metadata, signed files, and digital certificates, he says.

“With the current emphasis on privacy, this trend will increase significantly,” he says. To ensure authenticity on both ends, “identity will be much better, and source provenance will be much better,” he adds.

“In my opinion, this watermark reflects the policies and risk profile of each company that creates the content,” Mandia said.

Mandia warns that the next wave of AI-generated audio and video will be particularly difficult to detect as fake. “What if there's a 10-minute video and two milliseconds of it are fake? Will there ever be technology good enough to say, 'This is fake'? The infamous arms race begins, and the defender will lose in an arms race.”

Make cyber criminals pay

Cyberattacks have become more costly overall for victim organizations, both financially and reputationally, Mandia says. The time has come, he argues, to reverse the equation and increase the risk for the attackers themselves by doubling down on sharing attribution intelligence and naming names.

“We've actually gotten pretty good at threat intelligence, but we're bad at attributing threat intelligence,” he says. A model that continually places the burden on organizations to strengthen their defenses is not working. “We're putting costs on the wrong side of the hose,” he says.

Mandia believes it's time to reconsider agreements with countries that serve as safe havens for cybercriminals, to focus on calling out the individuals behind the keyboards, and to share attack attribution data. He pointed to the sanctioning and naming of the leader of the prolific LockBit ransomware group this week by international law enforcement. Officials from Australia, Europe and the United States worked together to impose sanctions on Russian national Dmitry Yuryevich Khoroshev, 31, of Voronezh, Russia, for his alleged role as the leader of the cybercrime organization. They offered a $10 million reward for information about him and published his photo, a move Mandia praised as a sound strategy for raising the risk for bad actors.

“I think it's important. If you're a criminal and all of a sudden your photo is published all over the world, that's a problem for you. It's a deterrent, and a much bigger deterrent than 'raising the cost' to the attacker,” Mandia argues.

He said law enforcement, governments and private industry need to reconsider how to begin identifying cybercriminals effectively, adding that a major challenge in unmasking them is the privacy and civil liberties laws of each country. “We have to start addressing this issue without affecting civil liberties,” he says.