eSecurity Planet's content and product recommendations are editorially independent. When you click on links to our partners, we may earn money.

ShadowRay is an exposure of the infrastructure behind the Ray artificial intelligence (AI) framework. The exposure is under active attack, but Ray's developers dispute that it is a vulnerability and do not intend to fix it. The dispute between Ray's developers and security researchers highlights hidden assumptions, and understanding ShadowRay provides lessons on AI security, internet-exposed assets, and vulnerability scanning.

Description of ShadowRay

AI compute platform Anyscale developed the open source Ray AI framework, which is primarily used to manage AI workloads. The tool's customer list includes DoorDash, LinkedIn, Netflix, OpenAI, Uber, and more.

Security researchers at Oligo Security discovered CVE-2023-48022, dubbed ShadowRay, which notes that Ray fails to apply authorization in its Jobs API. The exposure allows any unauthenticated user with network access to the Ray Dashboard to launch jobs or execute arbitrary code on the host.
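To make the exposure concrete, a defender can check whether a Ray dashboard they own answers Jobs API requests without credentials. The following is a minimal sketch, not Oligo's or Anyscale's tooling. The function name is hypothetical, and the `/api/jobs/` path and the port 8265 used in the usage note are assumptions based on Ray's public Jobs REST API documentation that should be confirmed against the deployed Ray version. Run it only against hosts you are authorized to test.

```python
import http.client
import urllib.error
import urllib.request


def jobs_api_answers_anonymously(base_url: str, timeout: float = 3.0) -> bool:
    """Return True if GET <base_url>/api/jobs/ succeeds with no credentials.

    Assumption: the Ray dashboard serves its Jobs REST API under /api/jobs/.
    The request only lists jobs; it never submits one.
    """
    url = base_url.rstrip("/") + "/api/jobs/"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Refused connections, timeouts, malformed replies, and HTTP errors
        # such as 401 or 403 all mean no anonymous answer was received.
        return False
```

For example, `jobs_api_answers_anonymously("http://127.0.0.1:8265")` would probe a dashboard on the local machine. A `True` result from an address outside the trusted network is the condition the researchers describe.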

The researchers calculate that the vulnerability should score 9.8 (out of 10) under the Common Vulnerability Scoring System (CVSS), but Anyscale denies that the exposure is a vulnerability at all. Instead, the company maintains that Ray is only intended to be used within a controlled environment and that the lack of authentication is an intended feature.
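As a worked example of how a 9.8 can arise, the sketch below applies the CVSS v3.1 base score equations for the scope-unchanged case. The vector used in the usage note (network attack vector, low complexity, no privileges, no user interaction, high impact on confidentiality, integrity, and availability) is an assumption: it fits an unauthenticated remote code execution flaw and yields 9.8, but the vector itself is not stated here.

```python
import math

# CVSS v3.1 metric weights for the scope-unchanged case.
ATTACK_VECTOR = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
ATTACK_COMPLEXITY = {"L": 0.77, "H": 0.44}
PRIVILEGES_REQUIRED = {"N": 0.85, "L": 0.62, "H": 0.27}
USER_INTERACTION = {"N": 0.85, "R": 0.62}
IMPACT = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """Round up to one decimal place using the integer method from the spec."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(av: str, ac: str, pr: str, ui: str, c: str, i: str, a: str) -> float:
    """Compute the CVSS v3.1 base score when scope is unchanged."""
    iss = 1 - (1 - IMPACT[c]) * (1 - IMPACT[i]) * (1 - IMPACT[a])
    impact = 6.42 * iss
    exploitability = (
        8.22
        * ATTACK_VECTOR[av]
        * ATTACK_COMPLEXITY[ac]
        * PRIVILEGES_REQUIRED[pr]
        * USER_INTERACTION[ui]
    )
    if impact <= 0:
        return 0.0
    return roundup(min(impact + exploitability, 10))


# Assumed vector: AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H
print(base_score("N", "L", "N", "N", "H", "H", "H"))  # 9.8
```

With these inputs the impact term is about 5.87 and the exploitability term about 3.89, so the sum of roughly 9.76 rounds up to 9.8.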

ShadowRay Damage

Unfortunately, many customers don't seem to understand Anyscale's assumption that these environments will not be exposed to the internet. Oligo has already detected hundreds of public servers that have been compromised by attackers and categorizes the types of compromises as follows:

- Accessed SSH keys: Allow attackers to connect to other virtual machines in the hosting environment, gain persistence, and repurpose computing capacity.

- Excessive access: Embedded API administrator permissions give attackers access to cloud environments via Ray root access or Kubernetes clusters.

- Compromised AI workloads: Affect the integrity of AI model results, enable model theft, and potentially poison model training to alter future results.

- Hijacked compute: Repurposes expensive AI compute power for attackers' needs, primarily cryptojacking, which mines cryptocurrency on stolen resources.

- Stolen credentials: Expose passwords for OpenAI, Slack, Stripe, internal databases, AI databases, and more, potentially compromising other resources.

- Seized tokens: Allow attackers to steal funds (Stripe), conduct AI supply chain attacks (OpenAI, Hugging Face, etc.), or intercept internal communications (Slack).

If you haven't ensured that your internal Ray resources reside securely behind strict network security controls, run the Anyscale tool now to find your publicly available resources.
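Alongside any vendor tooling, a quick first pass is to review where each dashboard listens. The sketch below classifies bind addresses from a hand-maintained inventory and flags the ones that are not confined to loopback or private ranges. The inventory format, the names, and the labels are hypothetical, and the function accepts IP literals only. An "internal" result is not proof of safety, since NAT or port forwarding can still publish a private address.

```python
import ipaddress

# Labels that deserve immediate review.
RISKY = {"all-interfaces", "public"}


def classify_bind(host: str) -> str:
    """Classify an IP literal that a service is bound to.

    Raises ValueError if host is a hostname rather than an IP address.
    """
    addr = ipaddress.ip_address(host)
    if addr.is_loopback:
        return "local-only"
    if addr.is_unspecified:
        # 0.0.0.0 or :: means the service listens on every interface.
        return "all-interfaces"
    if addr.is_private:
        return "internal"
    return "public"


def risky_listeners(inventory):
    """Return the (name, host, port) entries whose bind address is risky."""
    return [
        (name, host, port)
        for name, host, port in inventory
        if classify_bind(host) in RISKY
    ]


# Hypothetical inventory of Ray head nodes: (name, bind address, port).
inventory = [
    ("train-head", "127.0.0.1", 8265),
    ("dev-head", "0.0.0.0", 8265),
    ("lab-head", "10.0.0.5", 8265),
    ("demo-head", "8.8.8.8", 8265),
]
print(risky_listeners(inventory))
```

Here `dev-head` and `demo-head` are flagged. Whether a flagged listener is actually reachable from the internet still depends on firewalls and security groups, so the result is a starting list for review, not a verdict.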

ShadowRay Indirect Lessons

While the direct cost to victims is significant, ShadowRay exposes hidden network security assumptions that have been overlooked in the rush to the cloud and AI adoption. Let's examine these assumptions in the context of AI security, internet-exposed resources, and vulnerability scanning.

AI security lessons

In the rush to harness AI's perceived power, companies hand initiatives to AI experts, who naturally focus on their primary objective: obtaining AI model results. That narrow focus leads companies to ignore three key hidden issues exposed by ShadowRay: AI experts lack security expertise, AI data needs encryption, and AI models need source tracking.

AI experts lack security expertise

Just as AI researchers assume that Ray is secure, Anyscale assumes that the environment is secure. Neil Carpenter, field CTO at Orca Security, said, “It's unfortunate that the authors aren't making an effort to address this CVE, but instead dispute it as being by design.”

Anyscale's response, set against how Ray is actually used, highlights a lack of security mindset among AI experts. Hundreds of exposed servers indicate that many organizations need to add security expertise to their AI teams or include security oversight in their operations. Companies that simply assume their systems are secure expose themselves to data compliance violations and other harm.

AI data requires encryption

Attackers easily discover and identify unencrypted sensitive information, especially the data that Oligo researchers describe as the “models or datasets [that] are the unique, non-public intellectual property that differentiates a company from its competitors.”

AI data is a single point of failure for data breaches and trade secret leaks, yet organizations with multi-million dollar budgets for AI research neglect the associated security spending needed to protect it. Fortunately, application layer encryption (ALE) and other modern encryption solutions can be purchased to protect internal or external AI data modeling.

AI models require source tracking

As AI models digest information for modeling purposes, AI programmers assume that all data is good data and that “garbage in, garbage out” never applies to AI. Unfortunately, if an attacker can inject false external data into the training data set, the model will be influenced, if not outright skewed. AI data protection, however, remains challenging.

“This is a rapidly evolving field,” Carpenter acknowledges. “However … existing controls can help protect against future attacks on AI training materials. For example, the first line of defense may include restricting access at both the identity and network layers … Securing a supply chain that includes AI training starts the same way as securing any other software supply chain: with a strong foundation.”

While traditional defenses are useful for internal AI data sources, they become exponentially more complex when incorporating data sources outside the organization. Carpenter suggests that additional consideration is needed for third-party data to avoid issues such as “malicious contamination of data, piracy, and implicit bias.” Data scrubbing to avoid these issues should be performed before adding data to the server for training AI models.
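One simple way to track sources is to fingerprint each approved data set and refuse anything that is unknown or has changed since approval. The sketch below illustrates that idea with SHA-256 hashes. It is an assumption about how source tracking could be done, not a method described by Carpenter or Oligo, and every name in it (`SourceRecord`, `register`, `unverified`, the file names) is hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceRecord:
    name: str     # data set identifier, such as a file name
    origin: str   # where the data came from
    sha256: str   # fingerprint recorded when the data was approved


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the raw data."""
    return hashlib.sha256(data).hexdigest()


def register(name: str, origin: str, data: bytes) -> SourceRecord:
    """Record an approved data set after it has been scrubbed and reviewed."""
    return SourceRecord(name=name, origin=origin, sha256=fingerprint(data))


def unverified(manifest: dict, batch: dict) -> list:
    """Return names in the batch that are unknown or altered since approval."""
    flagged = []
    for name, data in batch.items():
        record = manifest.get(name)
        if record is None or record.sha256 != fingerprint(data):
            flagged.append(name)
    return sorted(flagged)


# Hypothetical usage: one approved internal file, one unreviewed external file.
approved = b"review,label\ngreat product,1\n"
manifest = {"reviews.csv": register("reviews.csv", "internal-warehouse", approved)}
batch = {"reviews.csv": approved, "scraped.json": b"{}"}
print(unverified(manifest, batch))  # ['scraped.json']
```

A hash only proves that data has not changed since it was approved. It says nothing about whether the approved data was trustworthy, so the review step before registration still matters.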

Some researchers may accept all results as reasonable, including AI hallucinations. However, fictitious or corrupted results can mislead anyone trying to apply them in the real world. A healthy dose of cynicism should be applied to the process to motivate tracking the reliability, validity, and appropriate use of the data that influences AI.

Internet-exposed resource lessons

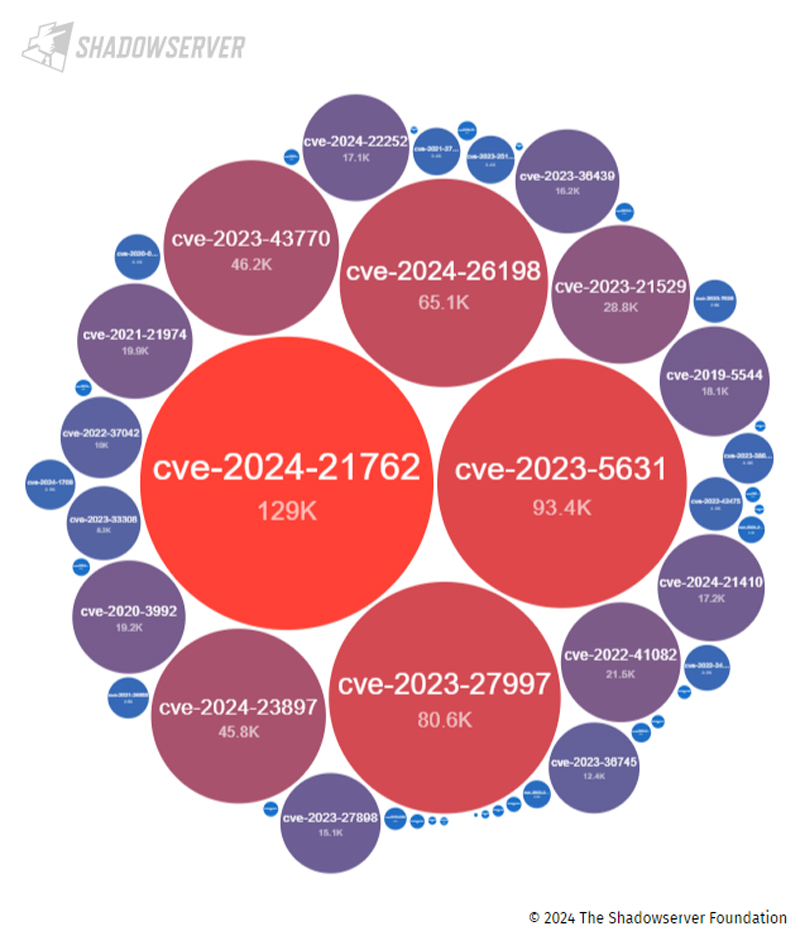

ShadowRay poses a problem because AI teams exposed their infrastructure to public access. However, many other organizations also leave internet-accessible resources with serious, exploitable security vulnerabilities. For example, the image above shows hundreds of thousands of internet-accessible IP addresses with critical-level vulnerabilities detected by the Shadowserver Foundation.

Searching for vulnerabilities at every severity level reveals millions of potential issues, yet even that count does not include disputed CVEs such as ShadowRay or other misconfigured and accessible infrastructure. Use a cloud-native application protection platform (CNAPP) or a cloud resource vulnerability scanner to detect exposed vulnerabilities.

Unfortunately, scanning requires AI development teams and other teams deploying resources to submit those resources to the security team for tracking or scanning. AI teams may stand up resources independently for budgeting and rapid deployment purposes, but they still need to tell security teams that the resources exist so that cloud security best practices can be applied to the infrastructure.

Vulnerability scanning lessons

Anyscale's dispute of CVE-2023-48022 puts the vulnerability in a gray zone alongside many other disputed CVE vulnerabilities. These range from issues that are not yet proven and may not be valid to issues where the product works as intended but is not secure (such as ShadowRay).

These disputed vulnerabilities are worth tracking through a vulnerability management tool or risk management program. Additionally, two important lessons revealed by ShadowRay are worth paying special attention to. First, vulnerability scanning tools differ in how they handle disputed vulnerabilities. Second, these vulnerabilities require active tracking and verification.

Be aware of differences in handling of disputed vulnerabilities

Different vulnerability scanners and threat feeds handle disputed vulnerabilities differently. Some may omit disputed vulnerabilities, some may include them as optional scans, and others may include them as different types of issues.

For example, Carpenter reveals: “Orca has taken the approach of addressing this as a posture risk rather than a CVE-style vulnerability. This is a more user-friendly approach for organizations because CVEs are typically addressed with updates (which are not available here), whereas posture risks are addressed through configuration changes (which is the correct approach to this problem).” IT teams must actively track how a particular tool handles a particular vulnerability.

Actively track and verify vulnerabilities

Tools promise to make the process easier, but unfortunately there is still no easy button for security. When it comes to vulnerability scanners, it is not obvious whether an existing tool scans for a specific vulnerability.

Security teams must actively track which vulnerabilities can affect their IT environment and confirm that their tools check for the specific CVEs of concern. Disputed vulnerabilities may require additional steps, such as filing a request with the vulnerability scanner's support team to learn how the tool addresses that particular vulnerability.

To further reduce the risk of exposure, use multiple vulnerability scanning tools and penetration tests to validate the potential risk of discovered vulnerabilities or to discover additional issues. In the case of ShadowRay, Anyscale provided one tool, but free open source vulnerability scanning tools also provide useful additional resources.
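The tracking described above can start as a simple coverage matrix: list the CVEs of concern, record which tools claim to check each one, and follow up on anything no tool covers. The sketch below is illustrative only. The scanner names and their coverage sets are hypothetical, and real coverage would need to come from each vendor's documentation or support team.

```python
def coverage_report(cves_of_concern, scanner_coverage):
    """Map each CVE of concern to the sorted names of scanners that check it."""
    return {
        cve: sorted(
            name for name, checks in scanner_coverage.items() if cve in checks
        )
        for cve in sorted(cves_of_concern)
    }


def uncovered(report):
    """Return the CVEs that no scanner in the report checks for."""
    return [cve for cve, scanners in report.items() if not scanners]


# Hypothetical coverage data for two made-up scanners.
cves_of_concern = {"CVE-2023-48022", "CVE-2021-44228"}
scanner_coverage = {
    "scanner_a": {"CVE-2021-44228"},
    "scanner_b": {"CVE-2021-44228"},
}

report = coverage_report(cves_of_concern, scanner_coverage)
print(uncovered(report))  # ['CVE-2023-48022']
```

In this made-up data the disputed CVE is the one that falls through, which is the situation where a support request or a second tool is warranted.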

Conclusion: Check and recheck for critical vulnerabilities

You don't have to be vulnerable to ShadowRay to understand the indirect lessons this issue teaches about AI risks, exposed assets, and vulnerability scanning. Although the real consequences are painful, continuous scanning of critical infrastructure for potential vulnerabilities can identify issues to resolve before attackers can cause damage.

Be aware of the limitations of vulnerability scanning tools, AI modeling, and employees rushing to deploy cloud resources. Create mechanisms for teams to collaborate on security, and implement systems that continuously monitor for potential vulnerabilities through research, threat feeds, vulnerability scanners, and penetration testing.

If you want to learn more about potential threats, consider reading about threat intelligence feeds.